This article may reference legacy company names: Continental Mapping, GISinc, or TSG Solutions. These three companies merged in January 2021 to form a new geospatial leader, Axim Geospatial.

AI is prevalent in nearly every aspect of our lives, from the voice recognition technology behind Siri on your iPhone to the algorithms that evaluate your credit score or assess the risk of recidivism among convicts before parole hearings. I’ve been doing a lot of thinking lately about geospatial AI, and in particular about AI that assists in creating geospatial data from imagery, lidar, video, SAR and other modalities. Here are five topics on my mind:

1. Geospatial AI is Already Here – And Changing Rapidly

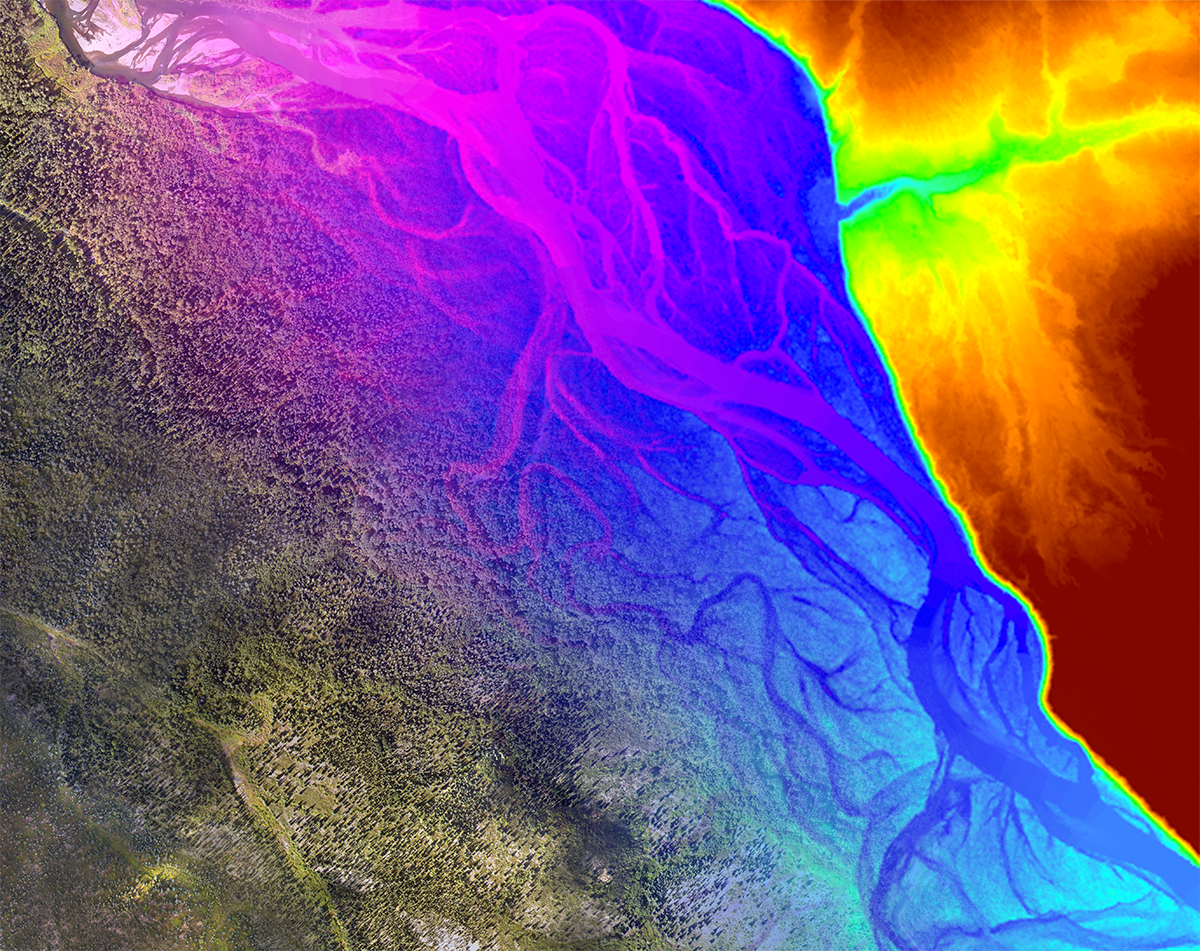

A few years ago, identifying a cat or a dog in an image was thought to be exceedingly difficult, yet within only a few short years AI can now do it with a very high success rate (~98%). As is often said, we are “swimming in sensors and drowning in data,” and our industry needs the same ability to extract information from the numerous platforms and sensors at our disposal. A great deal of geospatial AI is under development, and we must be aware of it, track its progress and use it wisely. Geospatial AI matters to every geospatial professional, whether you are a photogrammetrist, surveyor, engineer, data scientist, CAD technician, database administrator or GIS analyst.

2. We Need Purpose-Built Geospatial AI

The end goal of the AI industry is general-purpose AI: AI that can be built once and used in many different scenarios. Unfortunately, this is quite difficult and remains out of reach for now. Our emphasis is instead on specific problem sets, such as delineating building footprints from an image. Much like identifying a cat or dog in an image, delineating a building footprint is a distinct challenge, quite different from finding fire hydrants or delineating road centerlines or waterbodies. Geography matters too: building footprints in a typical American city can look quite different from those in Europe, Asia or South America. Purpose-built AI is essential to success, and to get there we must train AI for a specific purpose, document its strengths and weaknesses and audit its results.

3. Trained AI is Starting to Proliferate

Neural networks (NNs) are a cornerstone of geospatial AI. To function, NNs must be trained on known datasets. Much like a child, they must be fed examples and corrected when they get things wrong, over many iterations, until their success rate reaches an acceptable level. Success rates typically range from well over 60% to as high as 99%, depending on the complexity of the task. Training datasets are a hot commodity because the data must be structured, labeled and known to be correct. Given rapidly changing problem sets and the prohibitive cost and time needed to curate data, some in the industry are even developing approaches for synthesizing training data.
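To make the “feed examples, correct errors, repeat until the success rate is acceptable” loop concrete, here is a minimal sketch using a single-layer perceptron, the simplest kind of neural network. The two input features (hypothetical “brightness” and “rectangularity” scores) and the six-example training set are invented for illustration; real geospatial NNs are deep networks trained on labeled imagery, not hand-made feature pairs.

```python
import random
from typing import List, Tuple

# Hypothetical toy data: (brightness, rectangularity) scores per image patch,
# labeled 1 for "building" and 0 for "not building".
TRAINING_SET: List[Tuple[Tuple[float, float], int]] = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.9), 1),
    ((0.2, 0.1), 0), ((0.1, 0.3), 0), ((0.3, 0.2), 0),
]


def train_until_acceptable(samples, target_accuracy=0.95, max_epochs=100, lr=0.1, seed=0):
    """Train a single-layer perceptron, stopping once training accuracy is acceptable."""
    rng = random.Random(seed)
    weights = [0.0] * len(samples[0][0])
    bias = 0.0

    def predict(x):
        return 1 if bias + sum(w * xi for w, xi in zip(weights, x)) > 0 else 0

    accuracy, epoch = 0.0, 0
    for epoch in range(1, max_epochs + 1):
        order = list(samples)
        rng.shuffle(order)  # present the examples in a different order each pass
        for x, label in order:
            error = label - predict(x)  # 0 if correct, +1 or -1 if wrong
            if error:  # adjust the weights only when the prediction was wrong
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        accuracy = sum(predict(x) == label for x, label in samples) / len(samples)
        if accuracy >= target_accuracy:  # success rate is acceptable: stop iterating
            break
    return weights, bias, accuracy, epoch


if __name__ == "__main__":
    w, b, acc, epochs = train_until_acceptable(TRAINING_SET, target_accuracy=1.0)
    print(f"accuracy {acc:.0%} after {epochs} epochs")
```

A real workflow would measure the success rate on held-out validation data rather than on the training set, since a network can score well on examples it has effectively memorized.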

Competitions sponsored by organizations such as SpaceNet are also driving NN development. SpaceNet, a consortium of DigitalGlobe, NVIDIA and CosmiQ Works, offers cash prizes for developing geospatial AI. Each SpaceNet competition is built around a defined problem set, with free training data and a published approach for evaluating results. Activities like these, together with aggressive research in AI, have spurred an explosion of publicly available geospatial AI and a wide variety of NN architectures. The challenge quickly becomes how to audit their success over time and characterize each tool’s strengths and weaknesses.
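A published evaluation approach is what makes competing NNs comparable. SpaceNet’s building-footprint metric, as described by CosmiQ Works, matches each predicted footprint to a ground-truth footprint when their intersection over union (IoU) meets a threshold and then reports an F1 score. The sketch below illustrates that style of scoring in plain Python. It is not SpaceNet’s code: to stay self-contained it uses axis-aligned boxes instead of footprint polygons and a simple greedy matcher, and the 0.5 default threshold is an assumption for illustration.

```python
from typing import Dict, List, Tuple

# Axis-aligned box (xmin, ymin, xmax, ymax); a simplification of real footprint polygons.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def score_detections(predicted: List[Box], truth: List[Box], threshold: float = 0.5) -> Dict[str, float]:
    """Greedily match predictions to ground truth and compute precision, recall and F1."""
    unmatched = list(truth)
    tp = 0
    for p in predicted:
        best_idx, best_iou = None, threshold
        for i, t in enumerate(unmatched):
            overlap = iou(p, t)
            if overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx is not None:
            unmatched.pop(best_idx)  # each ground-truth feature can be matched only once
            tp += 1
    fp = len(predicted) - tp  # commission: features reported that aren't really there
    fn = len(truth) - tp      # omission: real features the model missed
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(truth) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    truth = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
    predicted = [(1, 1, 11, 11), (20, 20, 30, 30), (60, 60, 70, 70)]
    print(score_detections(predicted, truth))
```

Note that the omission count (`fn`) can only be computed against independent reference data; a model’s own confidence scores say nothing about features it never detected.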

4. Geospatial AI Auditing and Quality Assessment is Essential

AI auditing is gaining prominence globally as concerns grow about the bias that may be baked into algorithms, and the geospatial industry is no different. Once an NN is trained and put into practice, there is no definitive way to know that it is performing at the same level as it did during training. Of course, the NN will report a confidence score for the features it finds (e.g., 82% confident that a detected feature is a roadside asset), but what about features it misses outright? And if the NN assigns an attribute to a feature it finds (57% confident that it’s a yield sign), how do we know that attribute is correct? Must we manually validate the entire resulting dataset? That is already a challenge today with human-generated datasets, and it simply isn’t feasible as AI moves us into an era where we are “swimming in data and drowning in features.”

We face a familiar challenge: we need to eliminate human bias from the review of geospatial data and provide consistent metrics that assess every aspect of that data, including qualitative issues such as errors of omission, errors of commission, thematic accuracy and attribute accuracy. That has been a focus of our company for some time. We have developed a Quality-as-a-Service product, Data Fitness, that addresses this problem head on. Data Fitness measures the qualitative aspects of geospatial data, provides statistically valid and repeatable quality metrics and helps users evaluate the fitness for use of that data against their problem set. By mapping user requirements to statistically valid, repeatable quality metrics, products such as Data Fitness help audit geospatial AI and ensure that its output is fit for use.

Fitness Center: Audit and analyze the outcome of Data Fitness
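One common way to get statistically valid, repeatable quality metrics without reviewing every feature is to review a reproducible random sample and report an error rate with a confidence interval. The sketch below does this with the Wilson score interval and treats data as fit for use only if the interval’s upper bound is within the user’s tolerance. This is a generic statistical illustration, not the Data Fitness methodology; the function names, the 95% level and the 12-in-400 error count are all hypothetical.

```python
import math
import random
from typing import Sequence, Tuple


def draw_review_sample(feature_ids: Sequence[int], sample_size: int, seed: int = 42) -> list:
    """Draw a reproducible simple random sample of features for manual review."""
    return random.Random(seed).sample(list(feature_ids), sample_size)


def wilson_interval(errors: int, sample_size: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for an error rate (z=1.96 gives roughly 95% confidence)."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    p = errors / sample_size
    z2 = z * z
    denom = 1 + z2 / sample_size
    centre = (p + z2 / (2 * sample_size)) / denom
    half = z * math.sqrt(p * (1 - p) / sample_size + z2 / (4 * sample_size ** 2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def meets_requirement(errors: int, sample_size: int, max_error_rate: float) -> bool:
    """Fit for use only if even the upper confidence bound is within the user's tolerance."""
    _, upper = wilson_interval(errors, sample_size)
    return upper <= max_error_rate


if __name__ == "__main__":
    sample = draw_review_sample(range(10_000), 400)
    print(f"reviewing {len(sample)} features")
    # Hypothetical outcome: reviewers found 12 commission errors among the 400 sampled features.
    print(wilson_interval(12, 400))
    print(meets_requirement(12, 400, max_error_rate=0.05))  # upper bound ~0.052 exceeds 5%
```

Using the upper bound is a deliberately conservative choice: an observed 3% error rate does not demonstrate compliance with a 5% requirement when the sample is too small to rule out a higher true rate.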

5. We Need to Characterize and Categorize our AI

Purpose-built geospatial AI puts numerous AI tools in your toolbox for a given problem set. Unfortunately, this creates a new challenge: knowing which tool to use. Imagine having eight different algorithms available, all claiming to find building footprints in RGB imagery. Which one do you use? To make an educated choice, you need accessible AI metadata that characterizes and categorizes each algorithm, just as we do for geospatial data layers in an enterprise system. Geospatial AI metadata can be built without giving away the intellectual property behind an algorithm, and it helps users employ the tool correctly.

Take a recent SpaceNet challenge as an example. Round 2 focused on building footprint delineation in four locations: Las Vegas, Paris, Shanghai and Khartoum. The contest drew numerous submissions (see CosmiQ Works’ blog) and produced several clear favorites. However, even the winner struggled with certain feature classes and sub-types, and its success rates varied widely across the four cities. To use those publicly available NNs successfully, you’d best be aware of their respective strengths and weaknesses. In principle, several AI variants for a given problem set should be kept on hand, chosen according to the need and where that need is. I see a day in the not-so-distant future when a geospatial professional searches their AI toolbox for the best-fit algorithm to perform a task. Today, one size does not fit all.
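Here is a minimal sketch of what that AI metadata and toolbox search might look like. The `AIMetadata` record, its fields and the `best_fit` helper are hypothetical, and the model names and per-region scores are placeholders invented for illustration, not results from SpaceNet or any real model. The point is that recording audited performance per region lets the choice depend on where the work is.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class AIMetadata:
    """Hypothetical metadata record describing a trained geospatial model."""
    name: str
    task: str      # e.g. "building_footprint"
    modality: str  # e.g. "rgb", "lidar", "sar"
    # Audited F1 score per region, e.g. from an independent quality assessment.
    f1_by_region: Dict[str, float] = field(default_factory=dict)


def best_fit(toolbox: List[AIMetadata], task: str, modality: str, region: str) -> Optional[AIMetadata]:
    """Return the model with the highest audited score for this task, modality and region."""
    candidates = [
        m for m in toolbox
        if m.task == task and m.modality == modality and region in m.f1_by_region
    ]
    return max(candidates, key=lambda m: m.f1_by_region[region], default=None)


# Placeholder models and scores, invented for illustration only.
TOOLBOX = [
    AIMetadata("model_a", "building_footprint", "rgb", {"region_1": 0.85, "region_2": 0.55}),
    AIMetadata("model_b", "building_footprint", "rgb", {"region_1": 0.70, "region_2": 0.75}),
    AIMetadata("model_c", "road_centerline", "rgb", {"region_1": 0.90}),
]

if __name__ == "__main__":
    for region in ("region_1", "region_2", "region_3"):
        choice = best_fit(TOOLBOX, "building_footprint", "rgb", region)
        print(region, choice.name if choice else "no audited model")
```

Returning `None` when no model has been audited for a region is intentional: an unaudited model is a gap to close, not a safe default.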

Similar issues arose in the early days of GIS (way back in the late ’70s and early ’80s), when we debated the best algorithms for geospatial functions such as the traveling salesman problem or driving directions. PhD dissertations were written on these questions. Much of that debate is now settled: we iterated through the options, found those that best fit our collective needs and now simply use them. I suspect the same will happen with geospatial AI.

Get Started

We hope this article has provided some value to you! If you ever need additional help, don't hesitate to reach out to our team. Contact us today!